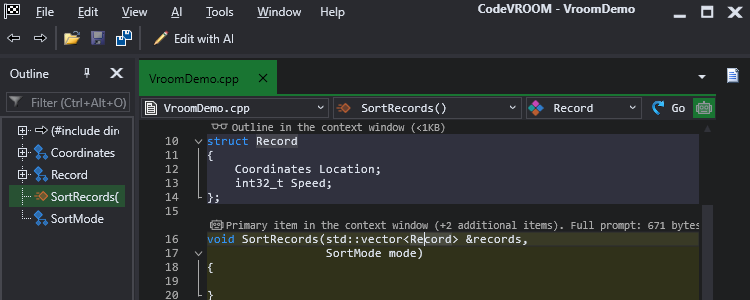

CodeVROOM works with a wide range of language models. It uses customizable templates to build conversations from your requests, code snippets, and rules, lets the AI complete them, and presents the results in an easily reviewable form. Rinse and repeat: you click, and CodeVROOM chats with the LLM.
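
The template-driven flow described above can be sketched roughly as follows. This is an illustration only: the template text, the `$placeholder` syntax, and the `build_conversation` helper are all hypothetical, and CodeVROOM's actual template format may differ.

```python
from string import Template

# Hypothetical templates; CodeVROOM's real template syntax is not shown here.
SYSTEM_TEMPLATE = Template("You are a code assistant. Follow these rules:\n$rules")
USER_TEMPLATE = Template("Request: $request\n\nCode:\n$snippet")


def build_conversation(request, snippet, rules):
    """Render the templates into a chat-style list of messages."""
    rules_text = "\n".join(f"- {rule}" for rule in rules)
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.substitute(rules=rules_text)},
        {
            "role": "user",
            "content": USER_TEMPLATE.substitute(request=request, snippet=snippet),
        },
    ]


messages = build_conversation(
    request="Rename x to count",
    snippet="x = 0",
    rules=["Keep changes minimal"],
)
```

The resulting list is what would be handed to the model for completion; changing the templates changes the conversation without touching the code that builds it.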

We recommend using CodeVROOM with LLaMA3.3-Instruct or ChatGPT models in the cloud, or with Devstral or Gemma locally. Tutorials for more models will be published gradually.

CodeVROOM supports Ollama running locally or on the network, and the following cloud AI providers out-of-the-box:

| Provider | Notes |
|---|---|
| Amazon Bedrock | API key = `<access key>:<secret key>` |
| Anthropic Cloud | Claude models |
| Cerebras Cloud | Order of magnitude faster than others |
| DeepSeek Cloud | OpenAI-compatible API |
| Google Gemini | OpenAI-compatible API |
| Groq | OpenAI-compatible API |
| Mistral | |
| OpenAI | |
| Together AI | Has a free trial |
| X AI | OpenAI-compatible API |
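
"OpenAI-compatible API" in the table above generally means the provider accepts the same chat completions request shape as OpenAI, so only the base URL, API key, and model name change. The sketch below builds such a request without sending it. The base URL, key, and model name are placeholders, and `build_chat_request` is a hypothetical helper, not part of CodeVROOM.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration; these are not CodeVROOM defaults.
request = build_chat_request(
    base_url="https://api.example.com/v1",
    api_key="YOUR_API_KEY",
    model="example-model",
    messages=[{"role": "user", "content": "Explain this function."}],
)
# Sending it would be: urllib.request.urlopen(request)
```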

CodeVROOM can aggressively trim code coming into the context window, which means fewer tokens, lower costs, and faster response times.
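
How CodeVROOM trims code is not described here. As one possible illustration of the idea, the sketch below keeps only a window of lines around a line of interest and replaces everything else with an elision marker. The `trim_to_window` function, its parameters, and the marker are assumptions made for this example.

```python
def trim_to_window(source, focus_line, radius=2, marker="# ..."):
    """Keep lines within `radius` of the 1-based `focus_line`; elide the rest."""
    lines = source.splitlines()
    start = max(0, focus_line - 1 - radius)
    end = min(len(lines), focus_line + radius)
    kept = lines[start:end]
    if start > 0:
        kept.insert(0, marker)  # signal that leading lines were dropped
    if end < len(lines):
        kept.append(marker)  # signal that trailing lines were dropped
    return "\n".join(kept)


source = "\n".join(f"line{i}" for i in range(1, 11))
trimmed = trim_to_window(source, focus_line=5, radius=1)
```

Here a ten-line input shrinks to three lines of code plus two markers, so fewer tokens are sent to the model.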

You can quickly try out CodeVROOM without signing up with any cloud provider by using the small number of evaluation tokens included with every installation. Evaluation tokens are subject to availability and should not be used with production code.